M+E Daily

DigitalFilm Tree and ETC@USC Dig Deep Into Virtual Production


When the Entertainment Technology Centre at the University of Southern California (ETC@USC) set out to produce Ripple Effect, an R&D short film testing virtual and remote production, the initiative ended up providing a real-world pressure test of the critical steps required to restart and continue film and TV production in a world reshaped by the COVID-19 pandemic.

The project was produced in conjunction with USC School of Cinematic Arts graduate students and alumni, and with the assistance of technology firms including DigitalFilm Tree.

Speaking during the Innovation presentation “‘Where’s the Beef?’ ETC Digs Deep into the Meat of Virtual Production” on May 12 at the annual Hollywood Innovation and Transformation Summit (HITS) Spring event, Greg Ciaccio, production and post technology design at ETC@USC, noted that the “underlying theme through the entire project of Ripple Effect was obviously COVID and safety.”

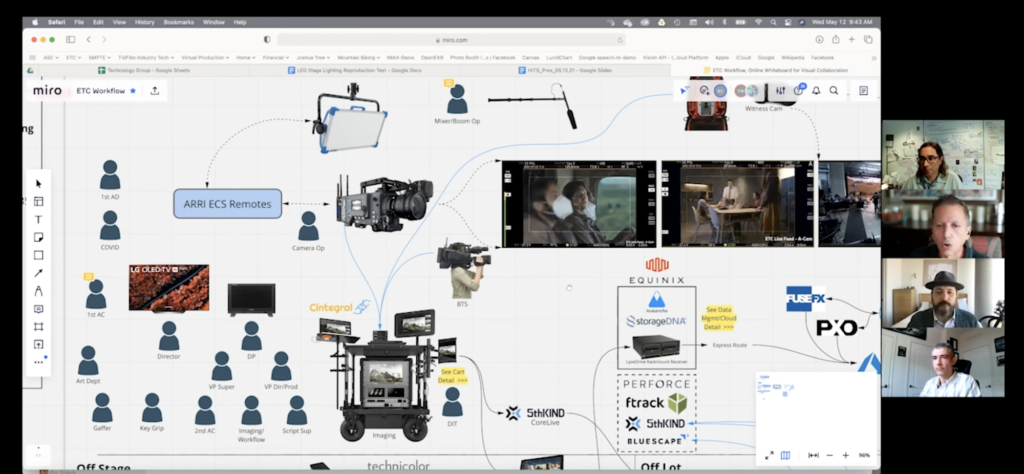

One major goal of the remote and virtual production sides of the project was to “minimise” the number of crew members needed on set at any given time, according to Ciaccio.

When DigitalFilm Tree started working on Ripple Effect, “I had not heard of the term SafetyViz,” Ramy Katrib, CEO and founder of the company, told viewers.

“Essentially the idea was to engender safety protocols on set at a time where essentially there was a shutdown in production [and] it was very challenging for people to get back on set,” he explained.

Before the project, his company had worked for a couple of years with what are known as previz and TechViz, he said. “The whole idea there was allowing productions, especially TV productions that were not used to virtual production, [to have] the ability to plan out their scenes and their shots,” he explained. That was accomplished via “blocking with avatars which represent the actors and essentially start to story visualise the scenes and the production you will shoot in the physical world,” he added.

SafetyViz was quite similar to previz and TechViz in that it starts with a 3D scan of the set “so you’re able to peruse it, and we had already done a Lidar scan of the XR Stage, where Ripple Effect was shot, so we leveraged that and started building out essentially story visualisation scenes, just like you would do a creative scene where an actor moves from point A to point B” and the director of photography (DP) captures that scene, he explained.

“We essentially then visualised the entire set – not just who’s in front of the camera but all the people that were scheduled to be on set like, behind the camera, the camera department [and] production design,” he said.

Meanwhile, the “new normal is we have a safety COVID officer who’s also on set,” he noted.

Zoom Played a Role Too

“So we built out the environment with all the people virtually that were about to go there and the benefit that provided was for all the department heads to plan what they were about to do before they went to the physical set, which was XR Stage,” he told viewers. That was done via Zoom sessions, “just like this,” he said with a smile, referring to the HITS session he was speaking in.

“We would share our screens and then show them the environment and, in some cases, some of the behind-the-camera and in-front-of-the-camera folks had never been to the location,” he pointed out. “So right away it provided everyone context [and a] lay of the land” for those people, he said.

In the Zoom sessions, “we started customising the environment once the COVID officer started seeing essentially challenges, like ‘we need to spread this out [so] the camera department should be here’; ‘this is the viewing station,’ ‘this is where wardrobe and makeup [are]’; ‘this is where the sanitation station is,’” he explained. “We went on to essentially create pathways of where certain departments, certain personnel were to traverse and also hang out, and that was all done before everyone got to set.”

One handy tool that was created for the project was a ring light at the bottom of each avatar, he noted. If the DP got too close to somebody else, the “ring lights would turn red if they were less than six feet” away from each other, he said, adding: “What that did is gave you a sense of the spatial and the measurements of how the set was so that you can plan that out in advance.”
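The article does not say how the ring-light check was built. As a rough illustration only, the rule Katrib describes (flag any two avatars standing less than six feet apart) can be sketched as a pairwise distance check. The function name, the 2D floor coordinates in feet and the "green" default colour are assumptions for this sketch, not details of DigitalFilm Tree's tool.

```python
import math
from itertools import combinations

SAFE_DISTANCE_FT = 6.0  # six-foot threshold cited in the article


def ring_colours(positions):
    """Hypothetical helper: map each avatar to 'red' if it is closer than
    SAFE_DISTANCE_FT to any other avatar, otherwise 'green' (assumed default)."""
    colours = {name: "green" for name in positions}
    # Compare every pair of avatars once.
    for (a, pos_a), (b, pos_b) in combinations(positions.items(), 2):
        if math.dist(pos_a, pos_b) < SAFE_DISTANCE_FT:
            colours[a] = colours[b] = "red"
    return colours


# Hypothetical (x, y) floor positions in feet on the scanned stage.
crew = {
    "dp": (0.0, 0.0),
    "focus_puller": (4.0, 3.0),  # 5 ft from the DP, so both turn red
    "director": (12.0, 0.0),
}
print(ring_colours(crew))
# {'dp': 'red', 'focus_puller': 'red', 'director': 'green'}
```

Checking every pair is simple and fast enough for a crew of a few dozen people; a larger scene would more likely use a spatial index.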

There were also “simple things like measurement tools” if somebody wanted to know the distance between one wall and another wall, he noted, adding: “You’re able to measure the entire environment virtually over a Zoom session so you can make sense of how you’re going to essentially plan the production out.”

There was an “a-ha moment” while going through the planning when the safety officer said: “This just isn’t going to work. There’s just not the room or the space to keep people apart,” recalled Erik Weaver, director of adaptive production and special projects at ETC@USC.

“That’s when we started revising the virtual environment in real time, well before people got to set,” Katrib noted.

Noting that ETC is a non-profit think tank that reports to the CTOs of most of the major Hollywood studios, Weaver explained: “We look at innovative things and what’s coming around the corner and… a big thing that several of the studios came to look at was virtual production.”

Looking to the Future

The first question that anybody may ask, according to Weaver, is: Why should I care about virtual production?

The reason is that “right now we’re looking at almost 200 stages going up this year, 2021 – that represents roughly somewhere between” $700 million and $800 million in spending, Weaver noted, predicting: “This is going to create a mass exodus towards this particular technology.”

The technology can help with everything from taking an entire crew on location to Tunisia to simply creating shots of bedroom windows, he pointed out, adding: “It’s not just one technology. I think that a lot of people get confused and think that virtual production is just Mandalorian style [thing] or these monster LED volumes that cost” $4 million to $5 million to build.

He made another prediction: “This is really going to catapult as a major kind of shift in the way a lot of things are done.” Therefore, “being aware of this or studying a lot of this is very important,” he said.

Explaining why the 3D Lidar scan was important, Katrib said: “It’s critically important for certain people like the camera department,” the director and the actors “to know that when you set up a virtual camera… It provides so much insight on what is usually assumed, especially if a director or DP has never been to the environment.”

It is an “epiphany” for them when they realise the scan is accurate, and that creates confidence, which is “why it’s so critical,” Katrib added, noting that it is also a “practical benefit” for others working on a film. What he has found is that once the director, DP and often the visual effects supervisor “get acclimated, it becomes rather natural,” he pointed out.

“The amazing development over the last year is now we’re touching 12 other departments because this kind of benefit gets out [and now] production design is starting to get into the fold because they often times have SketchUp models [that are] relatively accurate – but they’re not Lidar,” Katrib said.

Weaver offered viewers a suggestion: “As you begin to build something like TechViz or previz models, one of the things you really need to understand for in-camera visual effects is that you need to begin to shift your budget. Your budget needs to go from traditional VFX and move to [a] new virtual art department,” where pre-planning must be done long in advance.

Rainer Gombos, co-founder of Realtra, who served as production supervisor on Ripple Effect, said the project had neither a huge production budget nor much time for pre-production.

“When you do a virtual production, you want to marry, of course, the real world set with the virtual set that is right behind it and separated by the LED wall and you want to have an invisible transition line… to make sure that the assets you have in front of the LED walls look just like the assets behind it,” Gombos explained.

Concluding the session, Ciaccio said: “We started out with a large stage and our crew adapted to the size of the stage and then we moved into Lux Machina, which is a smaller stage, and, for safety reasons, we had to take our crew of about 25 to 30 and bring it down to about 10 to 12. So that was a little bit of a challenge.” Technicolor, meanwhile, processed all the dailies remotely, he noted.

HITS Spring was presented by IBM Security with sponsorship by Genpact, Irdeto, Tata Consultancy Services, Convergent Risks, Equinix, MicroStrategy, Microsoft Azure, Richey May Technology Solutions, Tamr, Whip Media, Eluvio, 5th Kind, LucidLink, Salesforce, Signiant, Zendesk, EIDR, PacketFabric and the Trusted Partner Network.