HITS

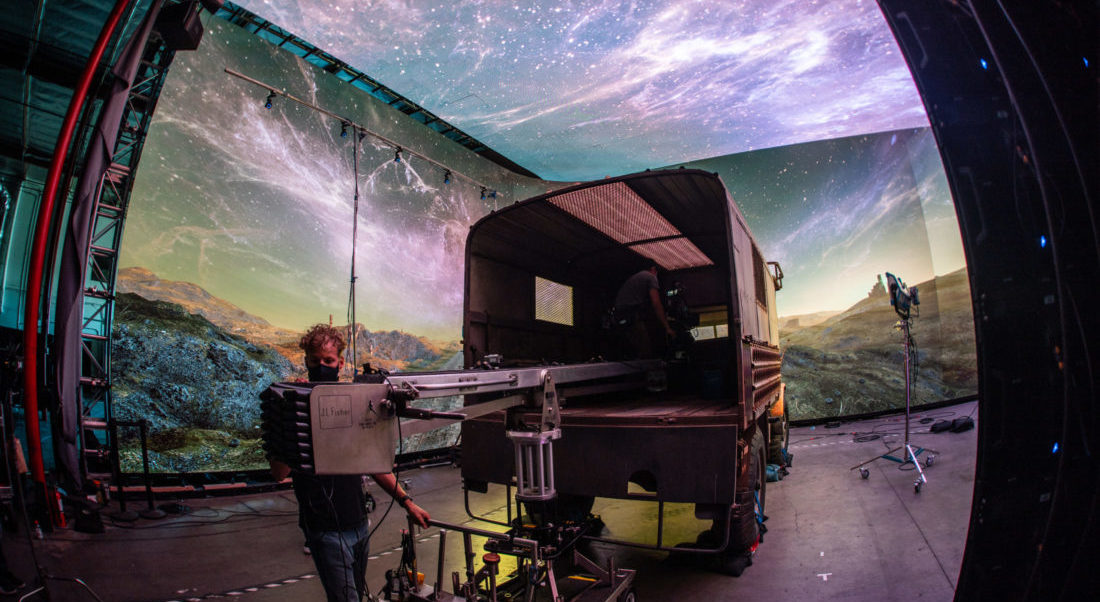

The Incredible Machines Bringing XR Stages to Life

Story Highlights

When you’re annihilating evil forces in a video game world, a single computer or console can get the job done.

When you’re creating an entire extended reality environment on a stage using a game engine, you need something a little more powerful.

OK, a lot more powerful.

Here Tankersley explains the mighty hardware components at the aptly named XR Stage in Los Angeles, where ICVR’s short film Away and many other creative visions have come to life.

Setting the Stage for Extended Reality

Walk onto an XR stage in action and you might find yourself anywhere in the world — the real one or an imaginary one.

In fact, one of the biggest advantages of shooting on an XR stage, whether you’re filming a major motion picture or a 30-second commercial, is that you can create whatever setting you like without travel time and expense.

And you can change that setting in a fraction of the time it would traditionally take.

How all of those 3D assets are rendered and filmed to look so realistic involves, as you might imagine, not just powerful machines but an advanced architecture that allows them all to work together.

Let’s start with the physical components.

An LED volume, the heart of the XR stage, has a single curved wall and a ceiling.

Both the wall and the ceiling are divided into segments. Each segment may have as many as a hundred LED panels and is rendered by a single computer, called a render node, which looks like a rack-mounted server.

All of the computers for all of the segments are precisely synced to work together.

The LED volume for Away had four segments on the wall and two on the ceiling, for a total of six render nodes.

Need for Speed

“It’s like a real-time render farm,” Tankersley said, referring to all the computers working together to render the graphics. “In a traditional production, it’s an offline process. You have your models, whether it’s a spaceship or a monster or whatever imaginary thing you’re trying to create in the computer, and the computer can draw it overnight. But on an LED stage, the computer has to draw it 60 times a second, because it’s live.”
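To put that 60-times-a-second figure in perspective, here is a minimal back-of-the-envelope sketch in Python. The function names are hypothetical and chosen for illustration only; the sole input taken from the article is the 60 frames per second rate.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to draw one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def frames_rendered(fps: float, hours: float) -> int:
    """Number of frames a render node must draw over a given span of time."""
    return round(fps * hours * 3600)


if __name__ == "__main__":
    # At 60 fps, each render node has roughly 16.67 ms to finish a frame.
    print(f"{frame_budget_ms(60):.2f} ms per frame")
    # One hour of live rendering at 60 fps is 216,000 frames with no drops allowed.
    print(f"{frames_rendered(60, 1):,} frames per hour")
```

Compared with an offline render that can take all night, the live budget is about a sixtieth of a second per frame, every frame.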

So why can’t you just hook up a bunch of gaming PCs with big GPUs to the LED wall segments?

“Those machines aren’t built to do this kind of job,” Tankersley said. “And they’ll overheat or melt down. You need a machine that can run at full speed all day long and have reliability and redundancy.”

Getting in Sync

Render nodes are just one piece of the virtual production puzzle.

Silverdraft built a new high-performance rendering architecture that feeds every piece of data into a cohesive whole.

This includes the motion-capture system (which tracks the camera and anything else physically on the stage) and wall-geometry mapping in addition to the 3D assets and LED segments.

Information from all of these components has to be processed in real time and rendered as the scene plays out, pixel by pixel.

“You can’t afford to drop a frame,” Tankersley said. “You can’t afford to slow down the render. You can’t afford to shut down the production because the computer isn’t working or because there’s a little frame drop when you pass by a particular object…. You really have to design the computer architecture for the stage to be as fast as possible and never slow down. It has to be running at full speed all the time.”

But we’re not done with all the machines yet.

First, any change in a scene means recalculating the lighting, which is called baking, so there’s often a computer just for the light-baking system.

For Away, ICVR also used a DMX lighting controller to match the standalone lighting with the LED wall lighting in real time.

Second, any changes to a data set — for instance, adding detail to a 3D asset — can require work by several people, which means several artist workstations are needed.

And third, you need cameras and a camera tracking system.

Shooting for the Stars

Two types of cameras are used in all virtual productions in LED volumes.

One is a virtual camera inside the game engine. It handles location scouting inside the virtual world, in-engine shot blocking, previsualization (aka “previs”) and techvis.

The other is the physical camera on set. It needs a camera tracking system connected to all of the other components (on-set camera, LED wall, render nodes and so on). For Away, ICVR used RedSpy by Stype.

Here Comes Troubleshooting

It’s rare for even a nonvirtual film shoot to go off without a hitch; issues can crop up related to everything from equipment breakdown to actor meltdown.

Since virtual sets have all that extra technological complexity, troubleshooting is to be expected.

Here are three common issues.

Seams. Remember how the LED wall is made up of multiple segments, and how all the computers have to work together to project a single scene onto them?

“Each of those segments has a different geometry, because the wall is typically curved,” Tankersley said. “So the computers also have to understand the specific curve of the wall and where the segments meet.” If the software picks up any errors in the geometry, “you’ll see some weird artifacts on the wall.” Artifacts include a range of undesirable visual elements.

Syncing. “All of the screens have to be synced to the [camera] frame so that the camera and the wall are in sync for every frame, so you don’t get any flicker,” Tankersley said. “If the sensor on the camera is running on a slightly different clock to the wall, you’ll see artifacts.” How precisely do all the sensors and clocks have to be synced? “To the microsecond.”

Camera angles and proximity. “LEDs have a color shift on different angles…you see this with any TV,” Tankersley said. “If you look at it straight on, the colors are right, and if you look at an angle, they’re off. So you have to pay attention to the angle of the camera to the wall.”

Also, if the camera is too close to the wall, you’ll start to see the individual pixels. Color matching can be an issue as well.
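To see why the syncing requirement described above is so strict, consider a rough sketch of how quickly two free-running clocks drift apart. Everything numeric here is an assumption for illustration: the 10 parts-per-million mismatch is a made-up figure, not a measurement from the XR Stage, and the function names are hypothetical. Only the microsecond tolerance comes from the article.

```python
def drift_us(ppm_offset: float, elapsed_s: float) -> float:
    """Accumulated drift, in microseconds, between two unsynchronized clocks.

    A mismatch of 1 part per million adds 1 microsecond of drift per second.
    """
    return ppm_offset * elapsed_s


def seconds_until_out_of_sync(ppm_offset: float, tolerance_us: float) -> float:
    """How long until the drift exceeds the allowed tolerance."""
    if ppm_offset <= 0:
        raise ValueError("ppm_offset must be positive")
    return tolerance_us / ppm_offset


if __name__ == "__main__":
    ppm = 10.0          # assumed clock mismatch (illustrative only)
    tolerance = 1.0     # "to the microsecond", per the article
    print(f"Drift after one minute: {drift_us(ppm, 60):.0f} microseconds")
    print(f"Out of tolerance after: {seconds_until_out_of_sync(ppm, tolerance):.2f} seconds")
```

Under these assumed numbers, the camera and the wall would fall out of tolerance in a fraction of a second, which suggests why the screens are locked to the camera frame rather than left to run on their own clocks.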

Troubleshooting any of these issues might take some time, of course.

But overall, the time saved by shooting a production virtually versus traditionally more than makes up for it.

Plus, when it comes to setting, not even the sky is the limit on an XR stage.

Thanks to powerful computers like Silverdraft’s and advanced tools and technologies like ICVR’s, your location can be anything from inside an atom to the farthest reaches of the imagination.

* By Elena Vega, ICVR