M+E Connections

AppTek: AI and ASR are Enhancing Captioning and Subtitling

Artificial intelligence (AI)-powered advancements in automatic speech recognition (ASR) and neural machine translation (NMT) technologies are transforming workflows within the media and entertainment industry, especially when it comes to live captioning and subtitling, according to Dr. Volker Steinbiss, managing director of AppTek.

“Believe it or not, I could have given a presentation with exactly the same title in 1993, when Philips launched a medical dictation workflow system in the radiology area,” he said Nov. 5 during the online Smart Content Summit EU event.

At the time, he was working in research at Philips Speech Processing, he pointed out during the AI & Metadata breakout session “Accelerating Workflows with Language Technology.”

“The radiologist could do dictation without any change in their working habits before the recorded translations were transcribed by an automatic system and then it went to a typist,” he recalled, noting there were “three different locations” involved.

“Speech recognition was not as good as it [is] now,” he said, adding: “The technology was far weaker but the product was good and successful anyway in 1993 because it was super dedicated.”

Language technology can also help in media and entertainment, he said, moving on to discuss seven M&E use cases for speech recognition, starting with what he called “the most obvious one”: automatic subtitling.

But automatic subtitling is not that simple, he said, noting that, “first, the quality needs to be perfect, so you better have a human in the loop, someone who does post editing, [and] you have to have a good workflow to do that, and you have to not use any off-the-shelf” speech recognition solution. AI is used, he pointed out.

The second use case he pointed to was translating subtitles from a template with a little help from machine translation. “We try to understand the pain points and try to find technical solutions… so we improve continuously and it’s super important to get the feedback and to build it into the whole machinery,” he said.

The third use case he pointed to was live closed captioning, which he noted is fully automatic, adding: “Subtitles have to be created on the fly.” A positive sign is that “automatic systems are hitting the 98 percent threshold, so that’s rather good,” he said, referring to accuracy.
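Steinbiss did not say how the 98 percent figure is measured. One common convention in speech recognition is word accuracy, taken as one minus the word error rate (WER), where WER is the word-level edit distance between a reference transcript and the system output, divided by the number of reference words. The Python sketch below illustrates that convention only; it is an assumption about the metric, not AppTek's method, and the function names are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from the output
                curr[j - 1] + 1,     # extra word inserted in the output
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Assumed convention: accuracy = 1 - WER (hypothetical helper)."""
    return 1.0 - word_error_rate(reference, hypothesis)


# Illustrative data only: a 50-word reference with one misrecognized word.
reference = " ".join(f"word{i}" for i in range(50))
hypothesis = reference.replace("word10", "wood10")
print(f"accuracy: {word_accuracy(reference, hypothesis):.2%}")
```

Under this convention, a 98 percent score corresponds to roughly one wrong, missing or extra word in every 50 words of reference text.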

The fourth use case he pointed to was the “full pipeline” in which ASR and MT are used for gisting purposes. The fifth use case was audio alignment and the sixth use case was leveraging metadata for translation.

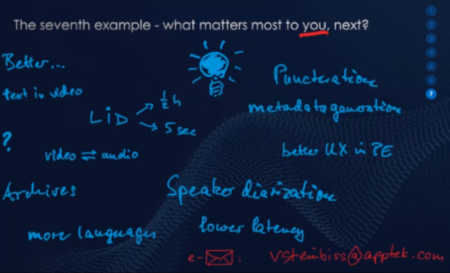

He concluded with the seventh use case, which he said would be whatever matters most to clients, and asked viewers to share ideas on which application the company should turn to next.

Smart Content Summit EU was produced by MESA and MESA Europe, in association with CDSA, HITS and the Smart Content Council, which meets regularly to share best practices, evaluate emerging technologies and collaborate to accelerate the pace of transformation in our industry.

The summit is sponsored by ATMECS, Cognizant, Deluxe, Digital Nirvana, Éclair, Eluvio, EIDR, Iyuno Media Group, TransPerfect, NAGRA, Premiere Digital, Zixi, Whip Media Group and AppTek.